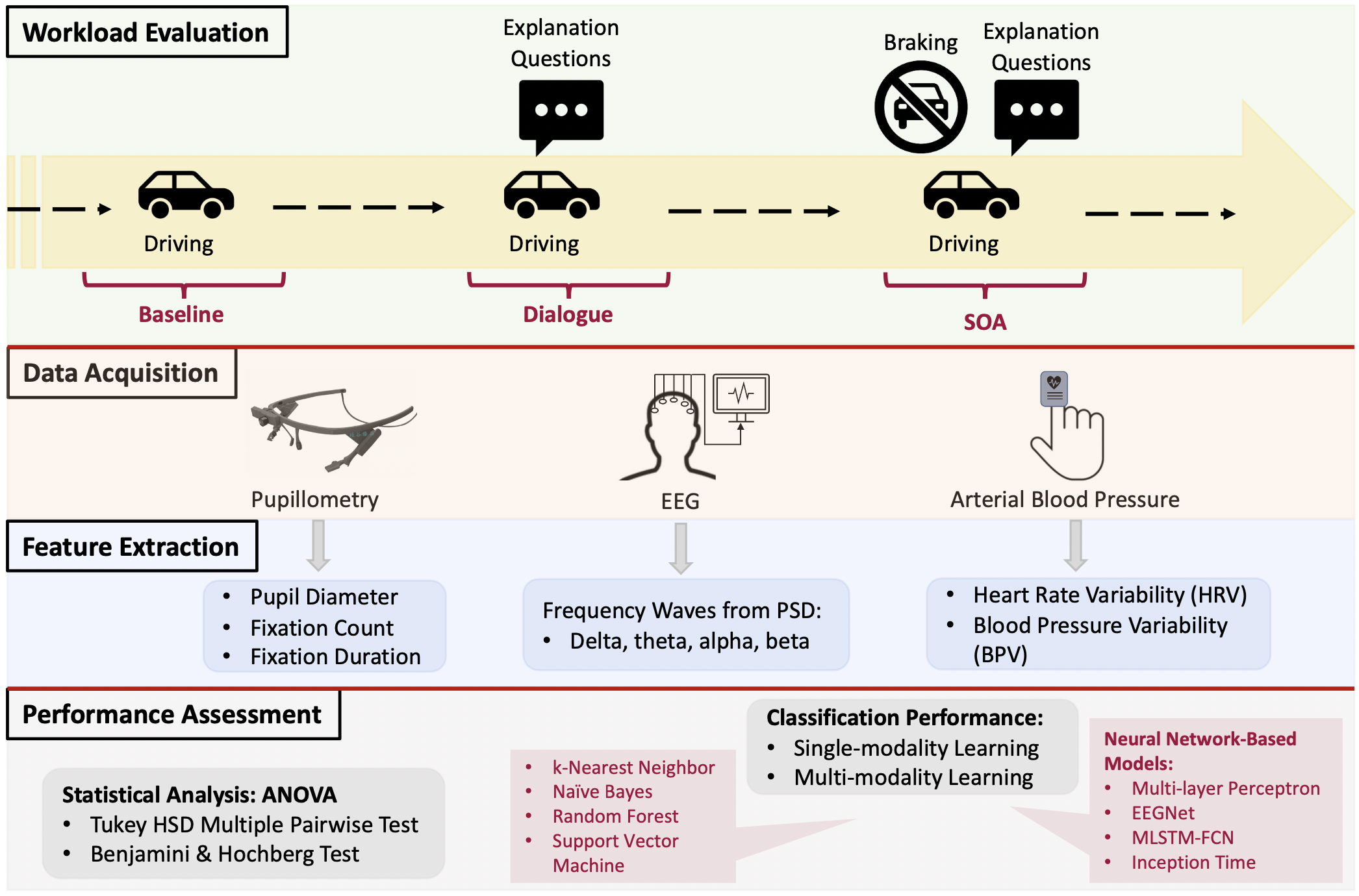

We analyzed and modeled data from a multimodal simulated driving study specifically designed to evaluate different levels of cognitive workload induced by various secondary tasks, such as dialogue interactions and braking events, performed in addition to the primary driving task. Our analyses provide evidence that eye gaze is the best physiological indicator of human cognitive workload, even when multiple signals such as EEG, arterial blood pressure, and others are combined. Our findings are important for future efforts toward real-time workload estimation in multimodal human-robot interactive systems, given that eye gaze is easy to collect and process and is less susceptible to noise artifacts than other physiological signal modalities.

@article{aygunetal22sensors,

title={Investigating Methods for Cognitive Workload Estimation for Assistive Robots},

author={Aygun, Ayca and Nguyen, Thuan and Haga, Zachary and Aeron, Shuchin and Scheutz, Matthias},

year={2022},

journal={Sensors},

publisher={MDPI},

volume={22},

pages={6834},

url={https://hrilab.tufts.edu/publications/aygunetal22sensors.pdf}

}